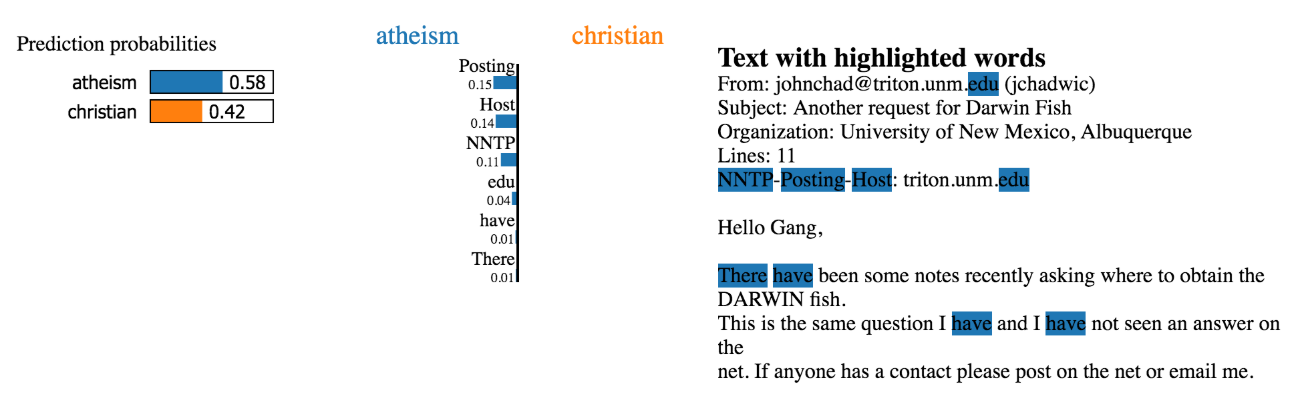

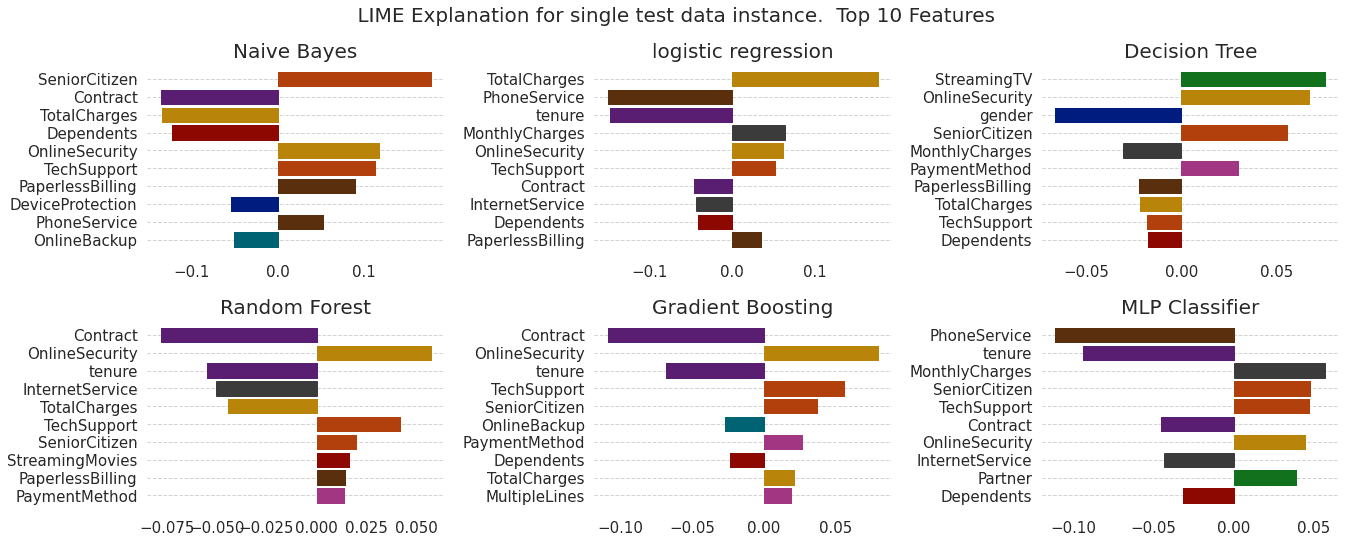

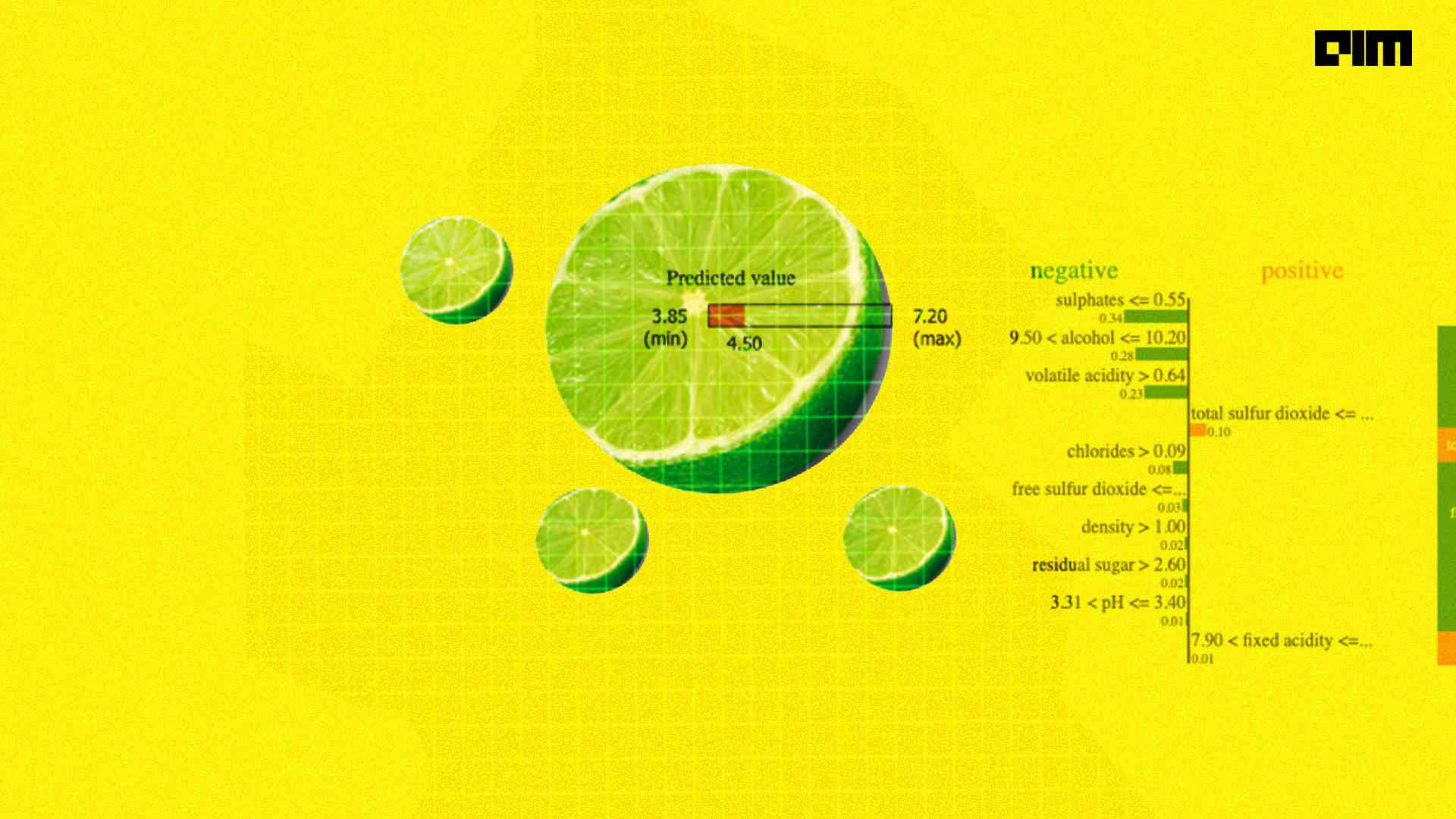

B: Feature importance as assessed by LIME. A positive weight means the...

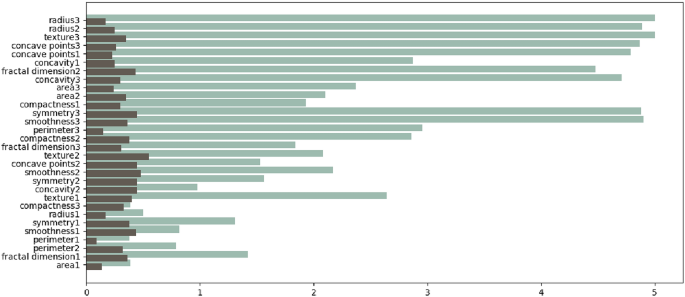

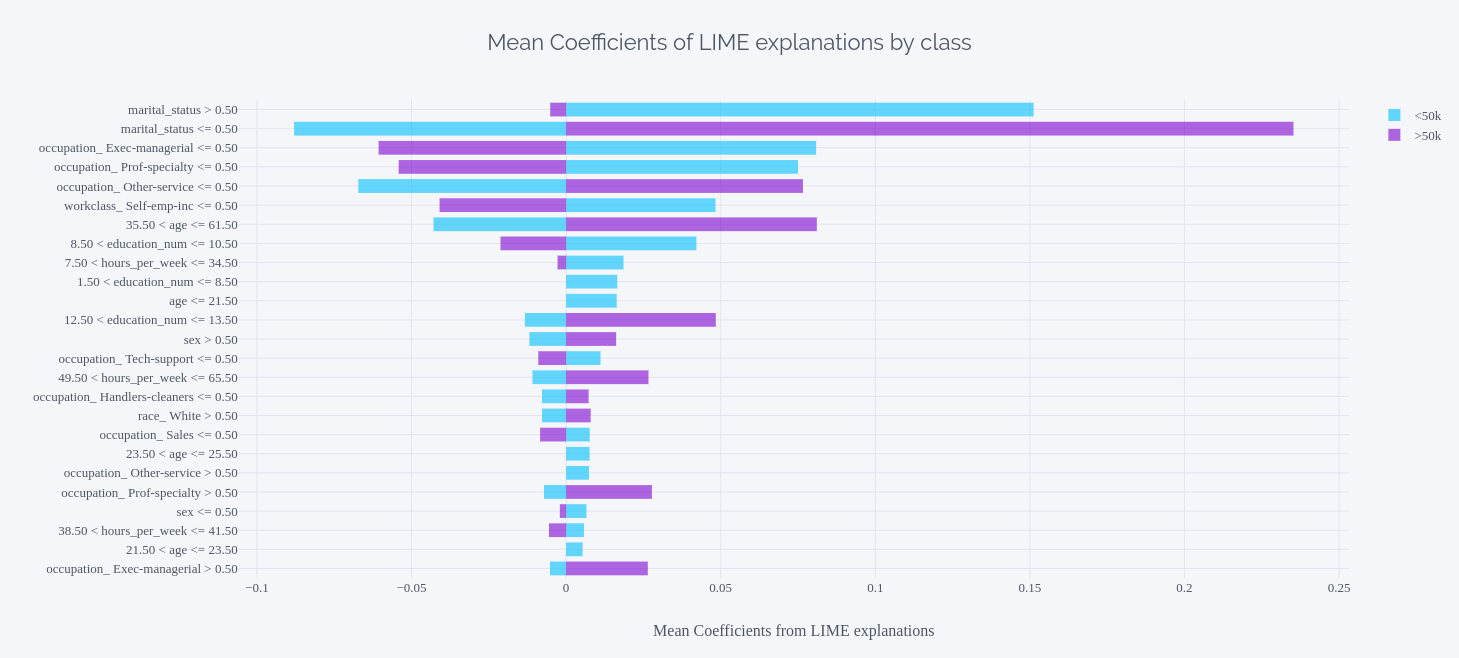

How to extract global feature importances of a black box model from local explanations with LIME? (Cross Validated)

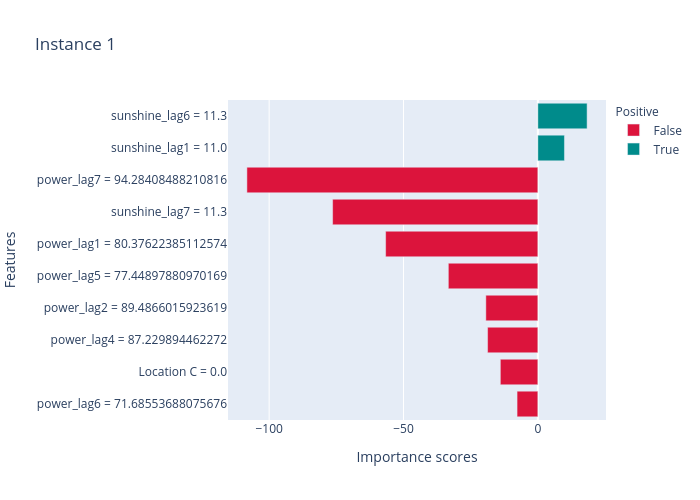

Specific-Input LIME Explanations for Tabular Data Based on Deep Learning Models (Applied Sciences)

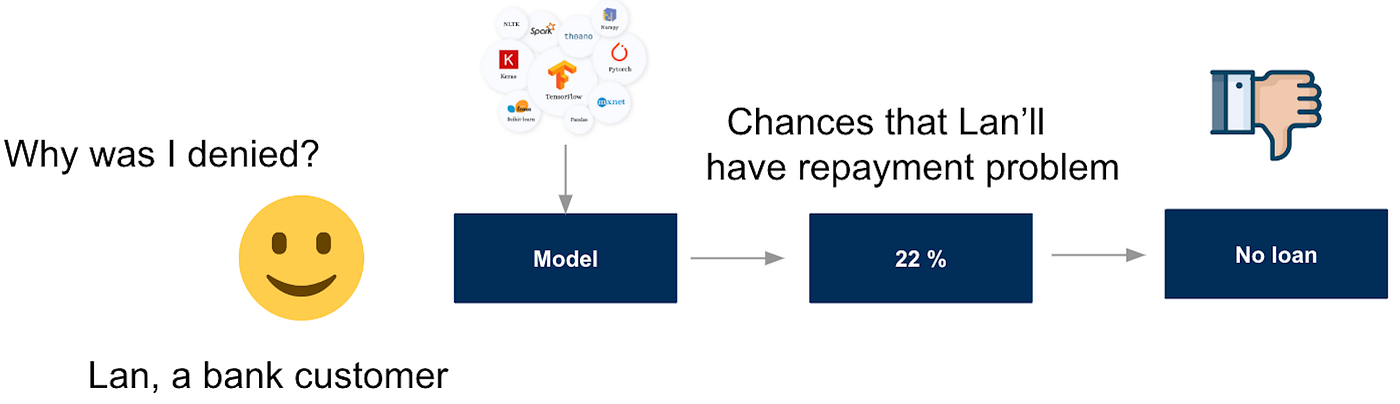

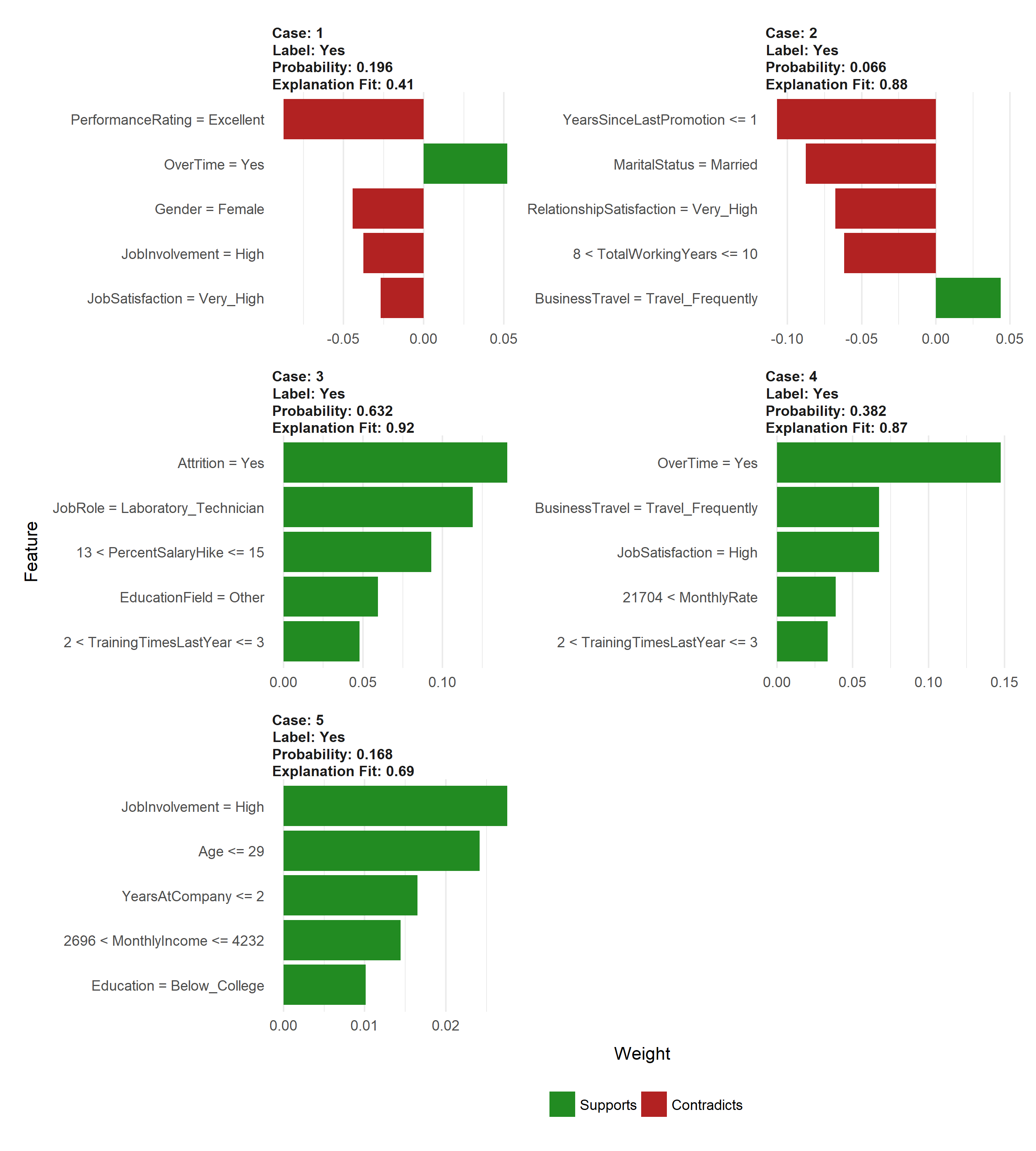

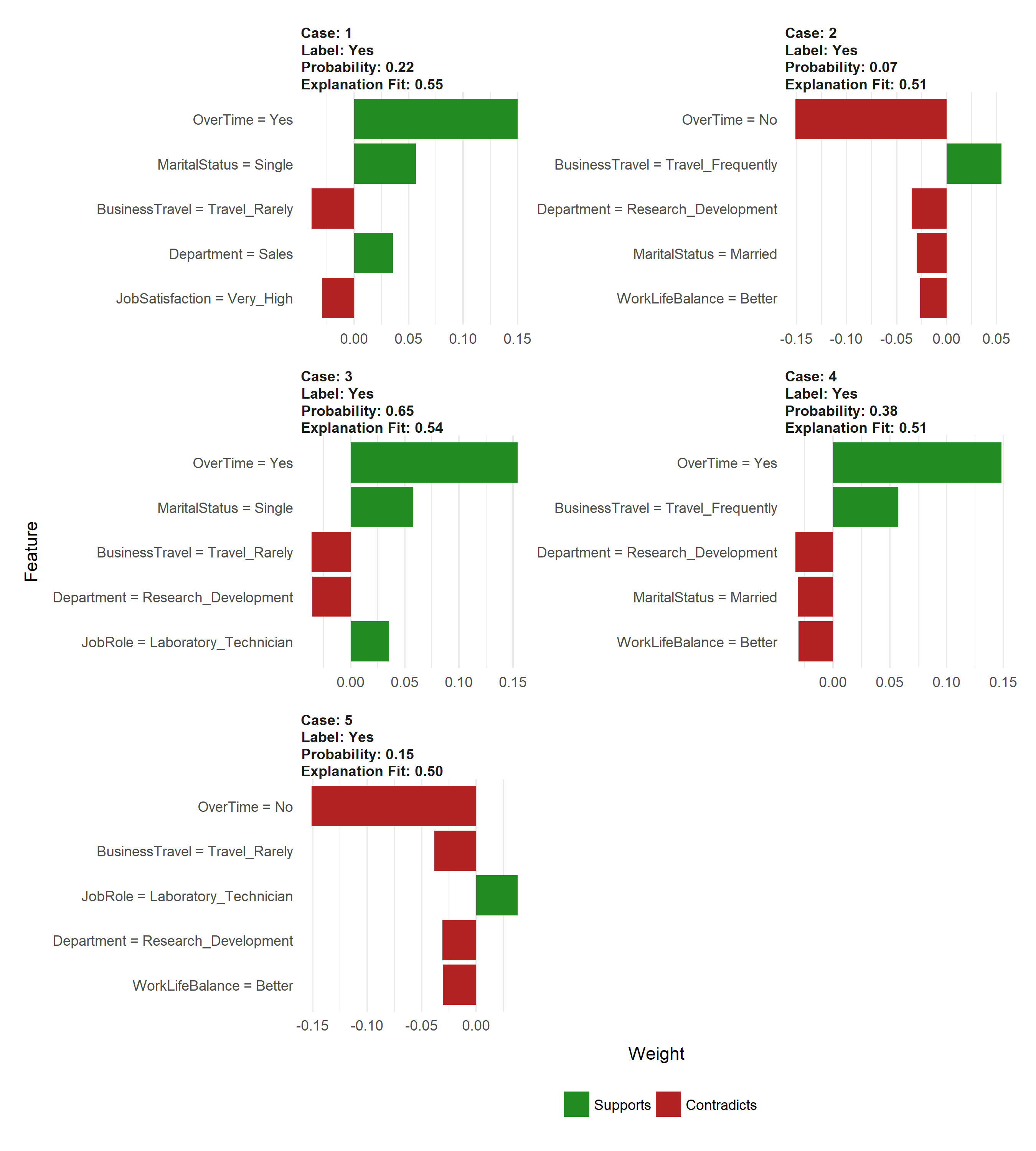

LIME: How to Interpret Machine Learning Models With Python, by Dario Radečić (Towards Data Science)

![How to Use LIME to Interpret Predictions of ML Models [Python]?](https://storage.googleapis.com/coderzcolumn/static/tutorials/machine_learning/lime_3.jpg)